Researchers have long established that racial biases held by customers and customer service agents can impact business transactions. But does that bias extend to chatbots?

New research from the University of Georgia found that customers perceived chatbot avatars differently than they perceived human customer service agents of the same race.

In a paper published in January in the Journal of the Association for Consumer Research, Nicole Davis, a third-year doctoral student in marketing at the Terry College of Business, explains that consumers' perceptions of Black chatbots differed from their perceptions of Black individuals.

Participants rated the Black chatbots as more competent than the white or Asian chatbots, even when they expressed negative stereotypes about Black people in general.

“We found that in the digital space, because Black AI is so unusual, stereotypes amplified in the opposite direction,” Davis said. “The Black bots were not just seen as competent, but really competent — more competent than the white or Asian bots.”

Understanding how racial stereotypes interact with artificial intelligence is an important step toward the ethical use of AI, Davis said. As AI and bots grow in popularity, businesses must understand these nuances, not only to support their bottom line but also to help reduce racial bias.

The study examined perceptions of competence and humanness. These are important factors in the digital space, Davis said, because they can influence how customers feel about and experience a business.

To gauge preexisting opinions, participants were asked about common stereotypes of white, Black and Asian racial groups. Many responses aligned with past research: Black people were perceived as less competent than white people, and Asian people were seen as the most competent, Davis said.

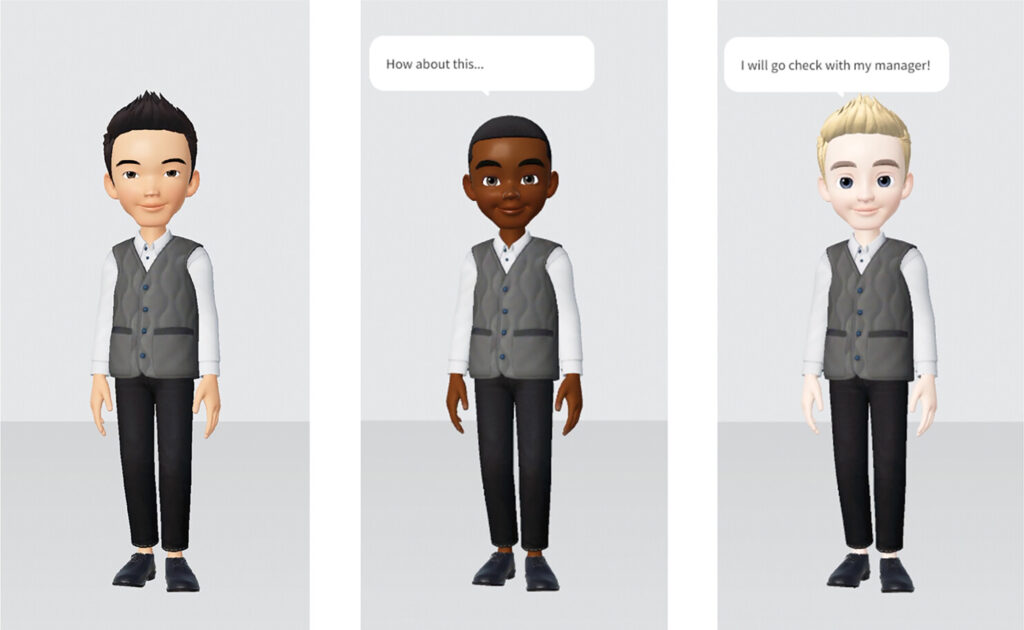

Next, participants were randomly assigned a bot that appeared white, Black or Asian and negotiated with it to reduce the cost of a vacation rental. They could end the negotiation at any time; once it ended, they were asked about the bot's competence, warmth and humanness.

“Regardless of how the participants identified or which bot they interacted with, we still found that Black people were generally perceived as less competent than white or Asian people,” Davis said. “But then when we asked about the bot, we saw perceptions change. Even if they said, ‘Yes, I feel like Black people are less competent,’ they also said, ‘Yes, I feel like the Black AI was more competent.’”

Davis said this difference can be explained by expectation violation theory. The theory proposes that when expectations are low, for example because of stereotypes, but the experience is positive, people look back on that experience as overwhelmingly positive.

“Participants think, ‘Oh, wow, not only is there a Black bot in the digital space, but they actually did really well,’” Davis said. “Their expectations are exceeded, and their positive responses are amplified. And that’s why we find that the Black bots are perceived to be higher in competence and more human than the white or Asian bots.”

These findings suggest that stereotypes applied in human interactions may function differently in digital spaces, Davis said.

“This is very important as marketers design highly dynamic, anthropomorphic bots to serve as a front door to their firm or business,” Davis said. “We need more research on AI to understand how it is impacting consumer perception, as well as when AI is going to help versus when it could hurt.”